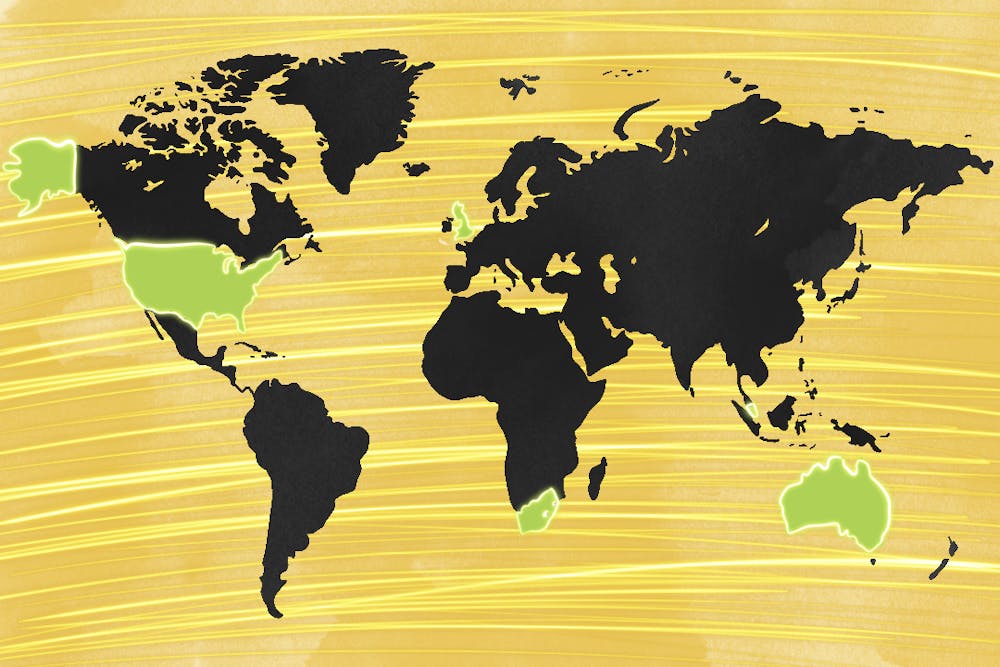

Early this summer, I had the privilege of being invited to speak at an international webinar focused on generative AI. Olivia Inwood, an academic literacy coordinator at Western Sydney University and the organizer of the event, invited speakers from around the globe, capturing diverse perspectives across many disciplines of study.

The webinar, titled "Grading the Graders: Global Perspectives on How Universities Have Reacted to Generative AI," took place over Zoom on July 11. Inwood moderated the six talks as well as the continuous discussion happening in the chat box.

My talk featured the recent work of State Press reporters, editors and artists to help tell the story of ASU’s growing relationship with generative AI. I highlighted some growing concerns among students, such as false flags of plagiarism and unassessed risks. It was fascinating to see these issues echoed by multiple speakers.

READ MORE: Opinion: ASU should not be the testing ground for teaching counselors with AI

The discussions between presentations were rich with interdisciplinary ideas, areas for deeper research and, most importantly, a continued emphasis on the value of real human conversations.

Surveying Student AI Usage

Samantha Newell, a lecturer in psychology at the University of Adelaide, presented the findings of a survey she led with Sophie Dahlenburg. In it, 399 students across various Australian universities were asked about their usage and opinions of generative AI.

Newell found that while AI is certainly used to fabricate assignment work, the majority of students are using AI for brainstorming or editing purposes. Additionally, 80% of students reported concern about the value of their degrees.

"This is very compelling evidence that we need to be having these broader discussions like we're having today, and (try) to navigate this space together," said Newell in her talk.

Newell concluded her presentation with a discussion about the fear of being falsely accused of AI use. This fear has grown rapidly among students to the point where some online communities feature step-by-step guides on how to stay safe.

AI's effect on trust, especially among students, was a major point of discussion moving forward.

READ MORE: ASU leaders explain University’s relationship with OpenAI

Opportunities in Game Development

William Mabin, the founder of Toxyn Games, has lectured at various higher education institutions in South Africa. Because technical issues kept Mabin from attending live, his presentation was played from a prerecorded video.

As a mentor for game developers and designers, Mabin works firsthand with new and upcoming software. He said he sees the potential for AI to help with tedious tasks such as 3D modeling and coding; he also disclosed that AI helped him brainstorm his presentation.

"Of course, the main concern is always sustaining life. And the main question is, how complicit are we in AI replacing people's jobs?" said Mabin in his presentation. "How are we making ourselves redundant?"

The Artificial Void and AI Detector Bias

Oliver Cocker is a literature and law student at the University of Auckland and an editor at the university’s publication, Craccum. In his presentation, he defined a cycle known as the "artificial void," where educators use AI to generate questions, students respond with AI-generated answers, and no one learns how to improve.

According to Cocker, mitigating the effects of this cycle could involve making assignments more creative, relating them to current events, or involving in-person engagement.

While AI detection software is growing in popularity as a solution, Cocker put its accuracy to the test. He submitted an old high school essay to a paid AI detection program five separate times to test its reported accuracy scores, changing only his last name at the top. A higher score meant the detector had assessed the text as more likely to have been written by a human.

The essays with the last names "Cocker" and "Sinclair" were both given originality scores of 85%, while "Temauri," "Abdullahi" and "Zhāng" were given scores of 81%, 80% and 79%, respectively. When Cocker submitted an AI-rewritten version of the same essay, it was given an originality score of 90%.

As suggested by both Cocker and Newell, personal engagement is more reliable than software for preventing academic integrity violations.

Protesting Stolen Art and Efforts

Esther Bello, an abstract artist and PhD candidate in Arts and Computation Technology at Goldsmiths, University of London, said she is working to create her own original dataset of paintings, interviews and other materials, protesting generative AI programs that fail to label content with proper credits.

As Bello pointed out, the reduction of effort does not stop with the artists; it often includes those working behind the scenes to label the training data.

"Labeled data doesn’t just appear from anywhere. Anyone who works with data knows that data in its raw form is nothing," said Bello in her presentation. "It takes human effort to do that … but when you hear about a new AI tool that has come out … you just hear 'It was trained on data,' like the data fell from heaven."

Art vs Artist in the AI Space

Akshita Jain, a mechanical engineering student at the National University of Singapore, presented on the relationship between art and artists in the generative AI space, as well as the claim that AI art tools make creating art more accessible.

"Why do people win prizes for typing a prompt into an image generator?" Jain asked during her talk. "What about AI art makes us artists? Does knowing anything about the person influence us to see the image in a different light?"

According to Jain, the unique personal and creative factors involved in creating great art are impossible for AI to replicate. While its use as a tool is undeniable, AI only increases accessibility to uninspired art.

As discussed throughout the webinar, generative AI often acts as a mirror of its creators, reflecting our own biases and errors right back at us. Tens of thousands of students are expected to have deeper access to generative AI once the semester starts in August; it is worth slowing down and considering AI's impact not only on our University, but across the entire world.

Editor's note: The opinions presented in this column are the author's and do not imply any endorsement from The State Press or its editors.

Want to join the conversation? Send an email to editor.statepress@gmail.com. Keep letters under 500 words, and include your university affiliation. Anonymity will not be granted.

Edited by Sophia Ramirez, Alysa Horton and Natalia Jarrett.

Reach the reporter at asgrazia@asu.edu and follow @emphasisonno on Twitter.

River is a senior studying English and mechanical engineering. This is their fourth semester with The State Press.